As the smartphone market gets more crowded and more competitive, manufacturers are treating their cameras as the distinguishing factor. And if you are reading this article, you have probably noticed a strange trend cropping up in smartphone cameras.


For the last couple of years, 12MP sensors have been the norm in most smartphone cameras. But this year, we are suddenly seeing a jump to 40MP and 48MP sensors. So, many of you (and we, too) probably have questions about these cameras. Are they better than the 12MP sensors used in most flagship devices? What is the advantage of a higher MP count? How are these sensors different from the usual 12MP or 16MP cameras? We will try to answer most of your questions in this article.

The 48MP camera

The smartphone that has got most people in Nepal talking about the 48MP camera is the Redmi Note 7. And some of you pointed out to us during our live unboxing of this phone that its processor, the Qualcomm Snapdragon 660, does not support a sensor as large as this one.

So, how did Xiaomi manage to put the 48MP sensor in the smartphone then? Is it all just a hoax or a marketing gimmick? The answer is… it’s complicated.

You don’t need to know about it

The camera system on a smartphone is complex. Many filters, lenses, and a mind-boggling amount of post-processing all contribute to the image that you see on your smartphone screen. So, most of you shouldn’t even be thinking about the MP count of your smartphone camera, because it’s not the only thing that affects image quality; there are a myriad of other factors. Instead, read camera reviews and look at photo samples to see whether you like the photos. If possible, take a few photos with the phone yourself in the store.

Let’s go a bit deeper

But if you are still interested in how a 48MP camera works, let me explain. When light passes through the camera lens, it is captured by a component inside the camera called the image sensor. The image sensor has millions of light-capturing wells, or cavities, called photosites. Each photosite has a specific location, and they are arranged in rows and columns like a spreadsheet. In cameras, photosites are also called pixels.

So, a larger pixel count means that the camera has more of these pixels. What is the benefit of having more pixels? Each photosite records a single light intensity for its small patch of the scene, so packing in more photosites samples the scene in finer patches. This means more detail in the photos.

So that’s amazing. You should definitely increase the MP count on your smartphone camera if you can, right? Not so fast. There’s one problem.

The counter-argument

Smartphones don’t have much room for large sensors. So, when you increase the number of pixels on the camera sensor, the overall size of the sensor can’t change that much. This means the pixels themselves have to shrink. While a typical 12MP camera may have a pixel size of 1.4 or 1.6 microns, the 48MP sensor only manages about 0.8 microns.
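To put rough numbers on this, the sketch below computes pixel size from sensor dimensions, assuming the whole sensor is tiled by equal square pixels (real sensors also lose some area to wiring and borders). The 6.4 mm × 4.8 mm sensor size is a hypothetical value chosen so that the 48MP case lands on the 0.8 micron figure above, and the function name `pixel_pitch_um` is ours.

```python
import math

def pixel_pitch_um(width_mm: float, height_mm: float, megapixels: float) -> float:
    """Approximate side length (in microns) of one square pixel.

    Assumes the entire sensor area is divided evenly among the pixels.
    """
    area_um2 = (width_mm * 1000) * (height_mm * 1000)  # sensor area in square microns
    pixel_count = megapixels * 1_000_000
    return math.sqrt(area_um2 / pixel_count)

# Hypothetical 6.4 mm x 4.8 mm sensor, same physical size for both pixel counts
for mp in (12, 48):
    print(f"{mp}MP -> {pixel_pitch_um(6.4, 4.8, mp):.2f} microns")
```

Packing four times as many pixels onto the same area halves each pixel’s side length: under these assumptions the same sensor gives 1.6 micron pixels at 12MP but only 0.8 micron pixels at 48MP.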

What happens when the size of the pixel decreases? The amount of light it captures also decreases. Many people explain this with the example of a bucket collecting rainwater. If you have a larger bucket, you’ll collect more water; if you have a smaller bucket, you’ll collect less. That’s exactly what happens with pixels.

Less light means poorer low-light performance. It also makes moving objects harder to capture, because the camera needs a longer exposure to gather enough light, and that results in blurry images.
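The bucket analogy can be made concrete. The light a pixel gathers scales with its area, which is the square of its side length. And because photons arrive randomly, the noise in a pixel grows only as the square root of the number of photons it collects (photon shot noise), so fewer photons means a worse signal-to-noise ratio. This is a minimal sketch that ignores every other noise source; the 400-photon count is an arbitrary illustrative number and the function names are ours.

```python
import math

def relative_light(pitch_a_um: float, pitch_b_um: float) -> float:
    """How many times more light a pixel of pitch A collects than one of pitch B.

    Collecting area scales with the square of the pitch, like the opening
    of the bucket in the rainwater analogy.
    """
    return (pitch_a_um / pitch_b_um) ** 2

def shot_noise_snr(photons: float) -> float:
    """Signal-to-noise ratio when photon shot noise is the only noise source.

    Photon arrivals follow Poisson statistics, so noise = sqrt(N) and
    SNR = N / sqrt(N) = sqrt(N).
    """
    return math.sqrt(photons)

ratio = relative_light(1.4, 0.8)
print(f"A 1.4 micron pixel gathers {ratio:.2f}x the light of a 0.8 micron pixel")

# Hypothetical photon count for the small pixel in one dim exposure
small_pixel_photons = 400
big_pixel_photons = small_pixel_photons * ratio
print(f"SNR: {shot_noise_snr(big_pixel_photons):.1f} (1.4 um) "
      f"vs {shot_noise_snr(small_pixel_photons):.1f} (0.8 um)")
```

With these assumptions, a 1.4 micron pixel collects roughly three times the light of a 0.8 micron one, and its signal-to-noise ratio is about 1.75 times higher.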

This is why the smartphone camera world has made peace with 12MP sensors. They strike a good balance between resolution and pixel size.

This is confusing

Then why is there suddenly this jump towards higher MP sensors? And why are these higher MP cameras showing better low-light performance rather than worse? It’s all thanks to a new type of color filter.

Colors galore

I told you that when light enters through the lens, it is captured in the photosites of the image sensor. But I left one thing out. Before the light reaches the image sensor, it passes through something called a color filter. As you probably guessed from its name, this filter is what gives color to the images. Without it, the image produced would be grayscale.

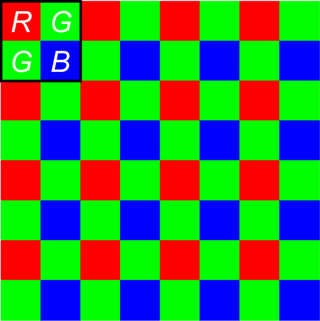

The most popular color filter used in smartphones and other digital cameras is called the Bayer filter. It is a repeating array of red, green, and blue filters. You can see that there’s more green than red or blue in this array. That is intentional: the Bayer filter was designed to mimic the human eye, and the human eye is more sensitive to green.
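Here is a small sketch of how a Bayer pattern tiles a sensor. It assumes the common RGGB arrangement (other orderings such as BGGR also exist) and simply counts how much of the sensor each color covers; `bayer_pattern` is a name we made up for illustration.

```python
from collections import Counter

# One 2x2 tile of the RGGB Bayer layout; the sensor repeats it everywhere.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]

def bayer_pattern(height: int, width: int):
    """Return the filter color above each photosite as a grid of letters."""
    return [[BAYER_TILE[r % 2][c % 2] for c in range(width)] for r in range(height)]

pattern = bayer_pattern(4, 4)
for row in pattern:
    print(" ".join(row))

# Share of photosites under each color filter
counts = Counter(color for row in pattern for color in row)
total = sum(counts.values())
for color in "RGB":
    print(f"{color}: {counts[color] / total:.0%}")
```

Half the photosites sit under green filters and a quarter each under red and blue, which is the extra green the human-eye argument calls for.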

Because there is a color filter above the sensor, not all of the light reaches the image sensor. Each photosite captures only the light that its own unit of the color filter lets through. That means the sensor captures only about 1/3rd of the total light that comes through the lens. The pixel below a blue filter captures only the intensity of blue light; the information about the intensity of the red and green components of the white light is lost.
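To see what the sensor actually records, the sketch below simulates a Bayer sensor by keeping only one of the three color values at each photosite. The RGGB layout, the 0 to 255 intensity scale, and the function names are assumptions for illustration.

```python
# Position of each color inside an (R, G, B) tuple
CHANNEL = {"R": 0, "G": 1, "B": 2}

def bayer_color(row: int, col: int) -> str:
    """Filter color over a photosite, assuming an RGGB layout."""
    return [["R", "G"], ["G", "B"]][row % 2][col % 2]

def mosaic(rgb_image):
    """Simulate a Bayer sensor reading an RGB scene.

    Each photosite keeps only the intensity of the one color its filter
    passes; the other two values are thrown away.
    """
    return [
        [pixel[CHANNEL[bayer_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]

# A 2x2 patch of a uniform scene whose true color is (200, 120, 40)
scene = [[(200, 120, 40)] * 2 for _ in range(2)]
print(mosaic(scene))  # → [[200, 120], [120, 40]]
```

Three numbers per pixel become one number per photosite; the two discarded values at each photosite are what the phone later has to reconstruct.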

But we see a full-color picture on our screens. How is that possible if 2/3rds of the light is lost? We now enter the realm of post-processing. The phone estimates the intensity of the two missing colors at each photosite from the neighboring photosites. There are many algorithms to do this, and the whole process is called demosaicing.
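One of the simplest demosaicing algorithms is bilinear interpolation: keep the color each photosite measured, and fill in each missing color by averaging the nearest photosites that did measure it. The sketch below assumes an RGGB layout and an image at least 2×2 photosites in size; real phones use far more sophisticated, edge-aware methods, and the function names are ours.

```python
CHANNEL = {"R": 0, "G": 1, "B": 2}

def bayer_color(row: int, col: int) -> str:
    """Filter color over a photosite, assuming an RGGB layout."""
    return [["R", "G"], ["G", "B"]][row % 2][col % 2]

def demosaic_bilinear(raw):
    """Rebuild an (R, G, B) value for every photosite from a Bayer mosaic.

    Requires at least 2x2 photosites so every 3x3 window holds all three colors.
    """
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            own = bayer_color(r, c)
            pixel = [0.0, 0.0, 0.0]
            for color, idx in CHANNEL.items():
                if color == own:
                    # This photosite measured this color directly: keep it.
                    pixel[idx] = float(raw[r][c])
                    continue
                # Otherwise average every neighbor in the 3x3 window
                # (clipped at the image border) that measured this color.
                samples = [
                    raw[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                    if bayer_color(rr, cc) == color
                ]
                pixel[idx] = sum(samples) / len(samples)
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out

# Bayer readout of a uniform scene whose true color is (200, 120, 40)
raw = [
    [200, 120, 200, 120],
    [120, 40, 120, 40],
    [200, 120, 200, 120],
    [120, 40, 120, 40],
]
print(demosaic_bilinear(raw)[1][1])  # → (200.0, 120.0, 40.0)
```

The photosite at row 1, column 1 only measured blue, yet its red and green values are recovered from its neighbors. On a flat scene this is exact; on real scenes with edges and fine textures, simple averaging produces color fringes, which is why phone makers invest in better algorithms.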

Finally (the answer)

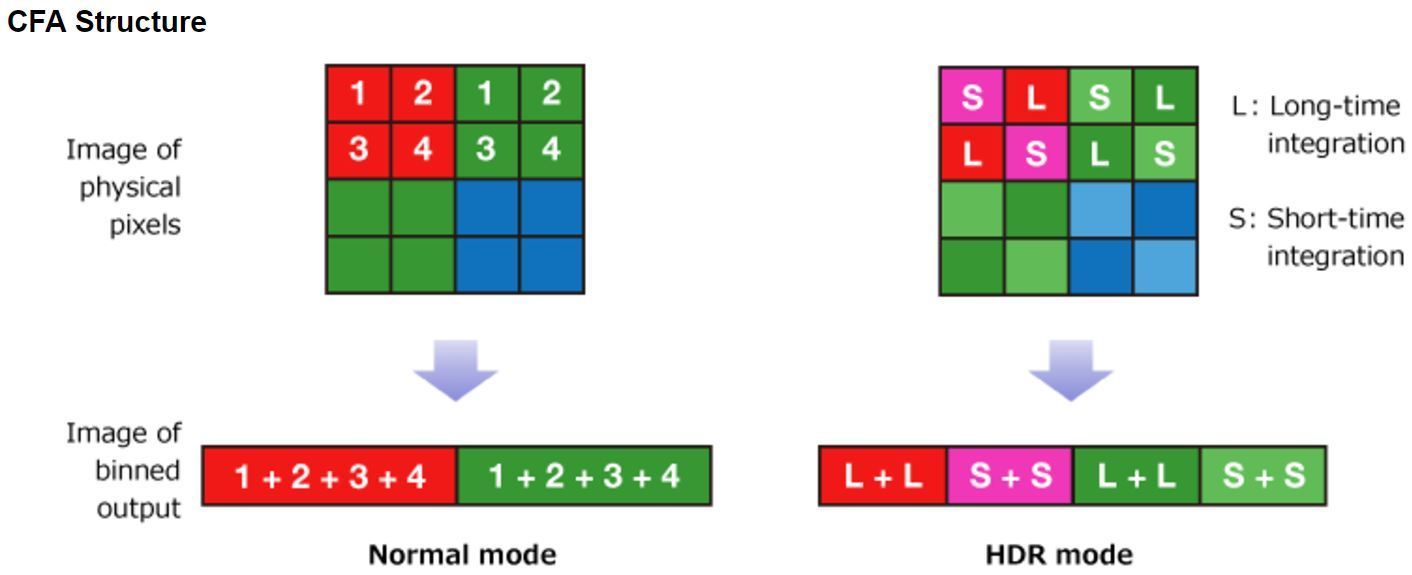

What these new 48MP sensors have is a new color filter called the quad-Bayer filter. Just look at the picture above and you’ll understand. In the quad-Bayer setup, instead of the color filter alternating from one pixel to the next, groups of four pixels arranged in a square share the same color filter.
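The difference between the two layouts is easy to generate. In this sketch (RGGB assumed, function names ours), the quad-Bayer color at a photosite is just the ordinary Bayer color of the 2×2 group it belongs to.

```python
def bayer_color(row: int, col: int) -> str:
    """Standard Bayer (RGGB assumed): the color changes at every photosite."""
    return [["R", "G"], ["G", "B"]][row % 2][col % 2]

def quad_bayer_color(row: int, col: int) -> str:
    """Quad Bayer: each Bayer cell is stretched over a 2x2 group of photosites."""
    return bayer_color(row // 2, col // 2)

for name, color_at in (("Bayer", bayer_color), ("Quad Bayer", quad_bayer_color)):
    print(name)
    for r in range(4):
        print(" ".join(color_at(r, c) for c in range(4)))
```

In the quad-Bayer grid, every 2×2 block of photosites shares one color, so the proportions of red, green, and blue stay the same as in an ordinary Bayer filter.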

What is the purpose of this? When you increase the pixel count, you decrease the pixel size, and that hurts the quality of the photos. What these new high-resolution sensors do is combine the data from the four pixels under the same color filter into a single pixel, which the quad-Bayer filter makes possible. By combining the raw data from four pixels into one, each resulting pixel gathers more light, and the noise is reduced (more samples means less noise). This process of combining the data of two or more pixels into one is called pixel binning.
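A minimal sketch of 2×2 binning on a quad-Bayer mosaic follows. It averages each same-color group (summing would work equally well; it only changes the scale). The noise demo is an assumption: it photographs a flat gray scene and adds independent Gaussian noise with a standard deviation of 10 to every photosite, a simplified stand-in for real sensor noise. Averaging four independent samples should roughly halve that noise.

```python
import random
import statistics

def bin_quad_bayer(raw):
    """Average each 2x2 same-color group of a quad-Bayer mosaic into one value.

    The result is an ordinary Bayer mosaic with half the width and half the
    height, i.e. 1/4 of the pixels. Assumes even width and height.
    """
    h, w = len(raw), len(raw[0])
    return [
        [
            (raw[r][c] + raw[r][c + 1] + raw[r + 1][c] + raw[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]

# Noise demo: a flat scene at level 100 with simulated Gaussian noise (sigma = 10)
random.seed(0)
size = 200
raw = [[100.0 + random.gauss(0, 10) for _ in range(size)] for _ in range(size)]
binned = bin_quad_bayer(raw)

raw_noise = statistics.pstdev([v for row in raw for v in row])
binned_noise = statistics.pstdev([v for row in binned for v in row])
print(f"{len(raw)}x{len(raw[0])} -> {len(binned)}x{len(binned[0])}")
print(f"noise before binning: {raw_noise:.1f}, after: {binned_noise:.1f}")
```

Applied to an 8000 × 6000 quad-Bayer mosaic (48MP), the same function would return a 4000 × 3000 Bayer mosaic, which is 12MP, and that mosaic can then be demosaiced like any ordinary Bayer image.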

This makes up for the small pixel size. But it also means the output image has 1/4th the resolution. So, a 48MP sensor produces 12MP images, and this is what is happening with phones like the Redmi Note 7.

The result is better dynamic range, better contrast, and better low-light performance. Phones like the Huawei P30 Pro and Mate 20 Pro take this even further by exposing two pixels in each square more than the other two. They combine this data to produce better HDR images. The approach seems to have real merit, as many reviewers have lauded Huawei’s cameras as the best on the market.
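How Huawei merges the two exposures isn’t described here, so the following is only a generic sketch of the idea, not Huawei’s pipeline. It assumes that, in each quad-Bayer group, two photosites received `exposure_ratio` times more exposure than the other two, and that readings clip at a hypothetical 10-bit saturation level of 1023. Where the long exposure is unclipped it is used directly; where it has clipped, the short exposure is scaled up instead. The function name and parameters are ours.

```python
def merge_quad_group(long_pair, short_pair, exposure_ratio, saturation=1023):
    """Merge one 2x2 same-color group into a single high-dynamic-range value.

    long_pair, short_pair: readings of the two long- and two short-exposure
        photosites (hypothetical values in 0..saturation).
    exposure_ratio: how many times more exposure the long pair received.
    Returns a value on the long-exposure scale, which may exceed `saturation`.
    """
    long_avg = sum(long_pair) / 2
    short_avg = sum(short_pair) / 2
    if max(long_pair) < saturation:
        # Shadows and midtones: the long exposure is unclipped and less noisy.
        return long_avg
    # Highlights: the long exposure clipped, so rescale the short exposure.
    return short_avg * exposure_ratio

print(merge_quad_group((200, 202), (50, 51), exposure_ratio=4))      # → 201.0
print(merge_quad_group((1023, 1023), (600, 602), exposure_ratio=4))  # → 2404.0
```

The bright patch comes out at 2404, well above the 1023 that a single long-exposure pixel could record, while the dark patch keeps the cleaner long-exposure reading. That combination is where the extra dynamic range comes from.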

Outro

Of course, we haven’t covered every minute detail of pixel binning in this article. If you want to dig deeper into the fascinating world of digital photography, by all means do so. Below are some articles and videos that helped me while writing this article. You will be spellbound by the near-magical world behind the scenes of digital photography.

https://fossbytes.com/redmi-note-7-48mp-camera-cons-review/

https://www.androidauthority.com/what-is-pixel-binning-966179/

https://www.ubergizmo.com/what-is/pixel-binning-camera/

https://www.digit.in/features/mobile-phones/explained-pixel-binning-in-smartphone-camera-sensors-43278.html

https://www.ubergizmo.com/articles/quad-bayer-camera-sensor/

https://web.stanford.edu/group/vista/cgi-bin/wiki/index.php/Pixel_Binning

https://www.gsmarena.com/explaining_huawei_p20_pros_triple_camera-news-30497.php

https://en.wikipedia.org/wiki/Bayer_filter
